A while back I was working on some audio prototyping. I made a simple audio player prototype and was experimenting with different audio plugin APIs. I decided to use libAV (not libavcodec from ffmpeg) for the simple reason that its documentation is much, much better than ffmpeg’s. I highly recommend taking advantage of that. There are many examples, code snippets and explanations here. Of course, API documentation is a bit dry when you first start using a library, so here is a tutorial and a good base you can build upon.

I will be using Qt 5 for the interface part, as it rocks. I also used libAO, mainly because I based my prototype on an older ffmpeg example that used it. There shouldn’t be much of an issue in switching from libAO to PortAudio, for example. But for my current purpose, libAO is extremely simple and lets me focus on libAV and other issues.

Setting up the project and interface in Qt

A short video on creating the interface, getting everything ready and an explanation of some basic concepts. If you don’t feel like listening to my beautiful French accent, here is a short summary.

You’ll need a project with 3 cpp files and 3 headers: main.cpp, mainwindow.cpp and audioplayer.cpp, plus mainwindow.h, audioplayer.h and globals.h. You need to link your project with the libAV and libAO libraries. Finally, you’ll need a menu item to open a file, a label, and 2 buttons to play and stop the audio file.

Simple main window

You should now have a basic Qt project ready, with the auto-generated main.cpp and the necessary files. Let’s get our main window up and running. It deals with opening the audio file and starting/stopping the audio player class. You should have created slots for our Open… menu item and for the play/stop buttons.

mainwindow.h

    #pragma once

    #include <QMainWindow>
    #include <string>
    #include <vector>

    #include "audioplayer.h"
    #include "globals.h"

    namespace Ui {
    class MainWindow;
    }

    class MainWindow : public QMainWindow
    {
        Q_OBJECT

    public:
        explicit MainWindow(QWidget *parent = 0);
        ~MainWindow();

    private slots:
        void on_actionOpen_triggered();
        void on_play_clicked();
        void on_stop_clicked();

    private:
        Ui::MainWindow *ui;
        AudioPlayer mainOut;
    };

There aren’t many surprises here, just your basic main window, a few slots, and the AudioPlayer class which will take care of reading/decoding/resampling and playing audio files.

mainwindow.cpp

    #include "mainwindow.h"
    #include "ui_mainwindow.h"

    #include <QDebug>
    #include <QDir>
    #include <QFileDialog>
    #include <QFileInfo>
    #include <QMessageBox>

    MainWindow::MainWindow(QWidget *parent) :
        QMainWindow(parent),
        ui(new Ui::MainWindow)
    {
        ui->setupUi(this);
    }

    MainWindow::~MainWindow()
    {
        delete ui;
    }

    /* Open file dialogue. */
    void MainWindow::on_actionOpen_triggered()
    {
        // QDir::homePath() is used instead of getenv("HOME"),
        // which is not set on every platform.
        QString fileName = QFileDialog::getOpenFileName(this,
                tr("Open File"),
                QDir::homePath(),
                // tr("Wave File (*.wav)"));
                tr("All (*.*)"));

        if (!fileName.isEmpty()) {
            // Show only the file name (no path) in the label.
            ui->filename->setText(QFileInfo(fileName).fileName());
            qDebug() << fileName;
            mainOut.setFile(fileName);
        }
    }

    /* Start audio playback. */
    void MainWindow::on_play_clicked()
    {
        mainOut.start();
    }

    /* Stop audio playback. */
    void MainWindow::on_stop_clicked()
    {
        mainOut.stop();
    }

I use QFileDialog::getOpenFileName to generate a platform-specific Open File dialogue. I commented out an example of a file-type filter. You would probably want to get the libAV supported formats and build the filter from that. There are a lot of supported formats. In on_actionOpen_triggered, you need to use the name of your own label to set the filename (mine is named “filename” in the code above).
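As a rough illustration of the filter idea, here is a minimal sketch that needs neither Qt nor libAV. The helper name buildFileFilter and the hard-coded extension list are my own assumptions, not part of the project; a real version would ask libAV which formats it supports. It only shows the filter-string syntax QFileDialog accepts: patterns inside parentheses separated by spaces, and filter groups separated by ";;".

```cpp
#include <cstddef>
#include <string>
#include <vector>

// Hypothetical helper: turns {"wav", "mp3"} into
// "Audio files (*.wav *.mp3);;All files (*)".
std::string buildFileFilter(const std::vector<std::string>& extensions)
{
    std::string filter = "Audio files (";
    for (std::size_t i = 0; i < extensions.size(); ++i) {
        if (i > 0)
            filter += ' ';               // patterns are space separated
        filter += "*." + extensions[i];
    }
    filter += ");;All files (*)";        // ";;" starts a second filter group
    return filter;
}
```

In the dialogue call, the result could be passed as the filter argument through QString::fromStdString(buildFileFilter({"wav", "mp3", "flac"})).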

The 2 button slots are in charge of playing and stopping the AudioPlayer.

globals.h

    #pragma once

    const double sample_rate = 48000.0;

It is a good idea to keep shared constants in a single header file, even if it seems overkill for a single constant.

The actual AudioPlayer magic

I have tried to comment the code as much as possible (maybe even too much), so most of it should be self-explanatory. You can definitely watch the Qt video, as I go through some basic audio programming concepts. I set up all the required libAV contexts to prepare everything. Some of it is direct copy/paste from their examples, as coding 20 error checks isn’t that interesting.

I then use a std::thread for the actual reading and playback of the file. I believe this is an elegant way to go about it. It frees up the main thread (and your interface) to do other work while audio is playing.

audioplayer.h

    #pragma once

    #include <QObject>
    #include <QDebug>

    #include <atomic>
    #include <chrono>
    #include <memory>
    #include <thread>

    #include <ao/ao.h>

    #include "globals.h"

    extern "C" {
    #include <libavresample/avresample.h>
    #include <libavcodec/avcodec.h>
    #include <libavformat/avformat.h>
    #include <libavutil/opt.h>
    #include <libavutil/mathematics.h>
    }

    class AudioPlayer : public QObject
    {
        Q_OBJECT

    public:
        AudioPlayer();
        ~AudioPlayer();

        void setFile(QString& s);
        void start();
        void stop();

    private:
        void setupResampler();
        void readAudioFile();

        AVFormatContext* audio_context_ = NULL;
        AVCodecContext* codec_context_ = NULL;
        AVCodec* codec_ = NULL;
        AVAudioResampleContext* resample_context_ = NULL;

        ao_device* outputDevice_ = NULL;
        ao_sample_format outputFormat_;

        std::atomic<bool> readingFile_;
        std::thread fileRead_;
    };

We need quite a few contexts for this to work as intended (playing back any format to our device). The atomic bool is in charge of stopping the thread.

audioplayer.cpp

    #include "audioplayer.h"

    #include <cstdio>  // fprintf
    #include <cstring> // memset

    extern "C" {
    #include <libavutil/mem.h> // av_freep
    }

    /* Initialize libAV and libAO. Set the physical device's parameters. */
    AudioPlayer::AudioPlayer() :
        readingFile_(false)
    {
        // LibAV initialisation, do not forget this.
        av_register_all();

        // Libav network initialisation, for streaming sources.
        avformat_network_init();

        // libAO initialisation, https://xiph.org/ao/doc/ao_example.c
        ao_initialize();

        // Ask kindly for the default OS playback device and get
        // an info struct from it. We will use it to set the
        // preferred byte format.
        int default_driver = ao_default_driver_id();
        ao_info* info = ao_driver_info(default_driver);

        // Prepare the device output format. Note that
        // this has nothing to do with the input audio file.
        memset(&outputFormat_, 0, sizeof(outputFormat_));
        outputFormat_.bits = 16; // Could be 24 bits.
        outputFormat_.channels = 2; // Stereo
        outputFormat_.rate = static_cast<int>(sample_rate); // We set 48 kHz
        outputFormat_.byte_format = info->preferred_byte_format; // Endianness
        outputFormat_.matrix = 0; // Channels to speaker setup. Unused.

        // Start the output device with our desired format.
        // If all goes well we will be able to playback audio.
        outputDevice_ = ao_open_live(default_driver, &outputFormat_,
                NULL /* no options */);

        if (outputDevice_ == NULL) {
            fprintf(stderr, "Error opening device.\n");
            return;
        }
    }

    /* Cleanup */
    AudioPlayer::~AudioPlayer()
    {
        // Don't forget to join the thread.
        readingFile_ = false;
        if (fileRead_.joinable()) {
            fileRead_.join();
            qDebug() << "Joined";
        }

        if (audio_context_ != NULL)
            avformat_close_input(&audio_context_);

        // Close the resampler, then free the context that was
        // allocated with avresample_alloc_context().
        if (resample_context_ != NULL) {
            avresample_close(resample_context_);
            avresample_free(&resample_context_);
        }

        // The device is NULL if ao_open_live() failed in the constructor.
        if (outputDevice_ != NULL)
            ao_close(outputDevice_);

        ao_shutdown();
    }

    /* Try to load and check if the audio file is supported.
       Audio headers are sometimes damaged, misleading or nonexistent,
       so we will try to read a bit of the file to figure out the codec.
       LibAV is awesome like that.
       Example https://libav.org/doxygen/master/transcode_aac_8c-example.html */
    void AudioPlayer::setFile(QString &s)
    {
        // Stop any running playback thread before touching the
        // contexts it is reading from.
        stop();

        // Make sure the audio context is free.
        if (audio_context_ != NULL)
            avformat_close_input(&audio_context_);

        // Try and open up the file (check if file exists).
        int error = avformat_open_input(&audio_context_, s.toStdString().c_str(),
                NULL, NULL);
        if (error < 0) {
            qDebug() << "Could not open input file." << s;
            audio_context_ = NULL;
            return;
        }

        // Read packets of a media file to get stream information.
        if (avformat_find_stream_info(audio_context_, NULL) < 0) {
            qDebug() << "Could not find file info.";
            avformat_close_input(&audio_context_);
            return;
        }

        // We need an audio stream. Note that a stream can contain
        // many channels. Some formats support more streams.
        if (audio_context_->nb_streams != 1) {
            qDebug() << "Expected one audio input stream, but found"
                     << audio_context_->nb_streams;
            avformat_close_input(&audio_context_);
            return;
        }

        // If all went well, we have an audio file and have identified a
        // codec. Let's try and find the required decoder.
        codec_ = avcodec_find_decoder(audio_context_->streams[0]->codec->codec_id);
        if (!codec_) {
            qDebug() << "Could not find input codec.";
            avformat_close_input(&audio_context_);
            return;
        }

        // And let's open the decoder.
        error = avcodec_open2(audio_context_->streams[0]->codec,
                codec_, NULL);
        if (error < 0) {
            qDebug() << "Could not open input codec_.";
            avformat_close_input(&audio_context_);
            return;
        }

        // All went well, we store the codec information for later
        // use in the resampler setup.
        codec_context_ = audio_context_->streams[0]->codec;

        qDebug() << "Opened" << codec_->name << "codec_.";

        setupResampler();
    }

It is important to note that this covers only the basics of audio_context initialization. A few edge cases are missing. For example, many formats support multiple audio streams, but this code doesn’t. We also assume we are opening a standard audio file with audio data on stream 0. This is a bad assumption and is left as an exercise to the reader to fix :)
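As a starting point for that exercise, here is a minimal sketch of the selection logic on its own, without libAV. StreamKind and findFirstAudioStream are hypothetical names I made up for the illustration; in the player you would loop over audio_context_->nb_streams and look at each stream's codec type instead.

```cpp
#include <cstddef>
#include <vector>

// Hypothetical stand-in for the media type libAV reports for each stream.
enum class StreamKind { Video, Audio, Subtitle, Other };

// Return the index of the first audio stream, or -1 if there is none.
int findFirstAudioStream(const std::vector<StreamKind>& streams)
{
    for (std::size_t i = 0; i < streams.size(); ++i) {
        if (streams[i] == StreamKind::Audio)
            return static_cast<int>(i);
    }
    return -1; // no audio stream in this file
}
```

The returned index would then replace the hard-coded 0 in setFile, and a result of -1 would take the same early-return path as the other error checks.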

    /* Set up a resampler to convert our file to
       the desired device playback. We set all the parameters we
       used when initialising libAO in our constructor. */
    void AudioPlayer::setupResampler()
    {
        // Release the resampler of a previously opened file, if any.
        if (resample_context_ != NULL) {
            avresample_close(resample_context_);
            avresample_free(&resample_context_);
        }

        // Prepare resampler.
        if (!(resample_context_ = avresample_alloc_context())) {
            fprintf(stderr, "Could not allocate resample context\n");
            return;
        }

        // The file channels.
        av_opt_set_int(resample_context_, "in_channel_layout",
                av_get_default_channel_layout(codec_context_->channels), 0);

        // The device channels.
        av_opt_set_int(resample_context_, "out_channel_layout",
                av_get_default_channel_layout(outputFormat_.channels), 0);

        // The file sample rate.
        av_opt_set_int(resample_context_, "in_sample_rate",
                codec_context_->sample_rate, 0);

        // The device sample rate.
        av_opt_set_int(resample_context_, "out_sample_rate",
                outputFormat_.rate, 0);

        // The file bit-depth.
        av_opt_set_int(resample_context_, "in_sample_fmt",
                codec_context_->sample_fmt, 0);

        // The device bit-depth.
        // FIXME: If you change the device bit-depth, you have to change
        // this value manually.
        av_opt_set_int(resample_context_, "out_sample_fmt",
                AV_SAMPLE_FMT_S16, 0);

        // And now open the resampler. Hopefully all went well.
        if (avresample_open(resample_context_) < 0) {
            qDebug() << "Could not open resample context.";
            avresample_free(&resample_context_);
            return;
        }
    }

We need a resampler, since we don’t want to limit ourselves to certain audio formats. Many tutorials skip this part, which is kinda crazy when you think about it. With this setup, your prototype can play any combination of audio format and sample rate that libAV can decode.
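To get a feel for what the resampler does to buffer sizes, here is a small sketch of the arithmetic only. resampledSampleCount is a hypothetical helper of mine, not a libAV function; the player itself relies on avresample_get_out_samples, which also accounts for the resampler's internal delay, whereas this version ignores it.

```cpp
#include <cstdint>

// Number of output samples (rounded up) needed to hold in_samples
// converted from in_rate to out_rate. Uses integer ceiling division
// and ignores any internal resampler delay.
std::int64_t resampledSampleCount(std::int64_t in_samples, int in_rate, int out_rate)
{
    return (in_samples * out_rate + in_rate - 1) / in_rate;
}
```

For example, a 1024-sample frame from a 44.1 kHz file needs room for 1115 samples at the 48 kHz device rate, while a file that is already at 48 kHz keeps its 1024 samples.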

    /* If all of the above went according to plan, we launch a
       thread to read and playback the file. */
    void AudioPlayer::start()
    {
        // We are currently reading a file, or something bad happened.
        if (readingFile_ || audio_context_ == NULL)
            return;

        // The previous thread finished on its own (end of file)
        // but was never joined. Join it before starting a new one.
        if (!readingFile_ && fileRead_.joinable())
            fileRead_.join();

        qDebug() << "Start";
        readingFile_ = true;

        // Launch the thread.
        fileRead_ = std::thread(&AudioPlayer::readAudioFile, this);
    }

    /* Stop playback, join the thread. */
    void AudioPlayer::stop()
    {
        qDebug() << "Stop";
        readingFile_ = false;

        if (fileRead_.joinable()) {
            fileRead_.join();
            qDebug() << "Joined";
        }
    }

Here is the actual thread setup and teardown.
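If the start/stop pattern is new to you, here is the same idea stripped of all audio code. Worker is a hypothetical class written only for this illustration: it counts loop iterations where the player would decode and play a frame, and it uses the same atomic flag plus join approach.

```cpp
#include <atomic>
#include <chrono>
#include <thread>

// Hypothetical miniature of AudioPlayer's threading: the worker loop runs
// until the atomic flag is cleared, counting iterations instead of playing.
class Worker
{
public:
    Worker() : running_(false), iterations_(0) {}
    ~Worker() { stop(); } // never destroy a joinable std::thread

    void start()
    {
        if (running_)
            return;
        // A thread that ended earlier must be joined before reuse.
        if (thread_.joinable())
            thread_.join();
        running_ = true;
        thread_ = std::thread(&Worker::run, this);
    }

    void stop()
    {
        running_ = false; // ask the loop to exit
        if (thread_.joinable())
            thread_.join(); // wait until it really has
    }

    bool running() const { return running_; }
    long iterations() const { return iterations_; }

private:
    void run()
    {
        while (running_) {
            ++iterations_;
            std::this_thread::sleep_for(std::chrono::milliseconds(1));
        }
    }

    std::atomic<bool> running_;
    std::atomic<long> iterations_;
    std::thread thread_;
};
```

Calling start(), waiting a little, then calling stop() leaves running() false and iterations() greater than zero. The join in the destructor matters: destroying a std::thread that is still joinable terminates the program.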

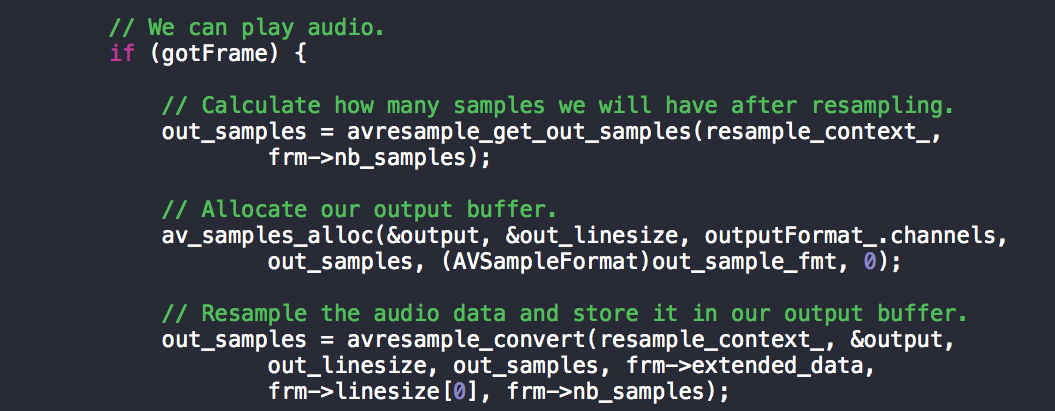

    /* Actual audio decoding, resampling and reading. Once a frame is
       ready, copy the converted values to libAO's playback buffer. Since
       you have access to the raw data, you could hook up a callback and
       modify/analyse the audio data here. */
    void AudioPlayer::readAudioFile()
    {
        // Start at the file beginning.
        avformat_seek_file(audio_context_, 0, 0, 0, 0, 0);

        uint8_t *output = NULL; // This is the audio data buffer.
        int out_linesize;       // Used internally by libAV.
        int out_samples;        // How many samples we will play, AFTER resampling.
        int64_t out_sample_fmt; // Bit-depth.

        // Bytes per output sample across all channels,
        // e.g. 2 channels * 16 bits = 4 bytes.
        const int bytes_per_frame = outputFormat_.channels * outputFormat_.bits / 8;

        // We need to use this "getter" for the output sample format.
        av_opt_get_int(resample_context_, "out_sample_fmt", 0, &out_sample_fmt);

        // Initialize all packet values to 0.
        AVPacket pkt = { 0 };
        av_init_packet(&pkt);
        AVFrame* frm = av_frame_alloc();

        // Loop till file is read.
        while (readingFile_) {
            int gotFrame = 0;

            // Fill the packet with data. If no data is read, we are done.
            // Only ever write "false" here, so a concurrent stop() is
            // never overwritten.
            if (av_read_frame(audio_context_, &pkt) < 0) {
                readingFile_ = false;
                break;
            }

            // Len was used for debugging purposes. Decode the audio data
            // and put it in the AVFrame.
            int len = avcodec_decode_audio4(codec_context_, frm, &gotFrame,
                    &pkt);

            // We can play audio.
            if (gotFrame) {
                // Calculate how many samples we will have after resampling.
                out_samples = avresample_get_out_samples(resample_context_,
                        frm->nb_samples);

                // Allocate our output buffer.
                av_samples_alloc(&output, &out_linesize, outputFormat_.channels,
                        out_samples, (AVSampleFormat)out_sample_fmt, 0);

                // Resample the audio data and store it in our output buffer.
                out_samples = avresample_convert(resample_context_, &output,
                        out_linesize, out_samples, frm->extended_data,
                        frm->linesize[0], frm->nb_samples);

                // This is why we store out_samples again, some issues may
                // have occurred.
                int ret = avresample_available(resample_context_);
                if (ret)
                    fprintf(stderr, "%d converted samples left over\n", ret);

                // DEBUG
                // printf("Finished reading Frame len : %d , nb_samples:%d buffer_size:%d line size: %d \n",
                //         len,frm->nb_samples,pkt.size,
                //         frm->linesize[0]);

                // Finally, play the raw data using libAO.
                // ao_play() expects a size in bytes, not in samples.
                if (out_samples > 0)
                    ao_play(outputDevice_, (char*)output, out_samples * bytes_per_frame);

                // Buffers from av_samples_alloc() are released with
                // av_freep(), not free(). Only free what we allocated.
                av_freep(&output);
            }

            // Release the packet data before reading the next packet.
            av_free_packet(&pkt);
        }

        // Because of delays, there may be some leftover resample data.
        int out_delay = avresample_get_delay(resample_context_);

        // Clean it.
        while (out_delay > 0) {
            fprintf(stderr, "Flushed %d delayed resampler samples.\n", out_delay);

            // You get rid of the remaining data by "resampling" it with a NULL
            // input.
            out_samples = avresample_get_out_samples(resample_context_, out_delay);
            av_samples_alloc(&output, &out_linesize, outputFormat_.channels,
                    out_delay, (AVSampleFormat)out_sample_fmt, 0);
            out_delay = avresample_convert(resample_context_, &output, out_linesize,
                    out_delay, NULL, 0, 0);
            av_freep(&output);
        }

        // Cleanup.
        av_frame_free(&frm);
        av_free_packet(&pkt);

        qDebug() << "Thread stopping.";
    }

Finally, we read the file and pass on the data to libAO for playback on the selected physical device. This is where you would hook up an EQ, a visualisation algorithm and any other method that needs access to the raw audio data.

A major pain point was figuring out the correct number of samples and converting that to the buffer size libAO expects. After a lot of troubleshooting I have nailed a value that works for me. If you hear glitches, crackles, slow-motion or any other audible defects, I highly recommend you record the audio and open it up in an audio editor. Zoom in to sample level and analyse what is really going on. Are you missing samples? Is there a conversion issue? Are your buffers being “cut”? It is very hard to identify a problem simply by listening to it.
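The conversion itself is simple once you know that ao_play wants a size in bytes while the resampler reports samples per channel. Here is a minimal sketch of that calculation; pcmBufferBytes is a hypothetical helper name, and it assumes interleaved PCM with a bit depth that is a multiple of 8.

```cpp
#include <cstddef>

// Size in bytes of an interleaved PCM buffer.
// samples_per_channel is what the resampler reports as "out samples".
std::size_t pcmBufferBytes(std::size_t samples_per_channel, int channels, int bits_per_sample)
{
    return samples_per_channel
           * static_cast<std::size_t>(channels)
           * static_cast<std::size_t>(bits_per_sample / 8);
}
```

For the 16-bit stereo device format used in this tutorial, that works out to 4 bytes per sample, so 1024 samples become 4096 bytes. Switching the device to 24 bits would make it 6 bytes per sample.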

This concludes my first-ever programming tutorial. I really hope this is useful to you, either in understanding how audio works, or just as a starting point for a great application. I would love to hear any thoughts, comments and criticism. Also, please link to any project that uses this :)

Have a great time with libAV!

The full project is available on my GitHub. Feel free to clone it, but note that it doesn’t include compilation instructions for Windows.